Project 5 – SOAP Outbound Adapter

Let's build our next integration using the SOAP Outbound Adapter. This adapter lets us call an external SOAP service and consume it.

In our example, we will publish a SOAP service on the integration bus to simulate an external service. We will then consume this service's WSDL to generate the BO automatically, and afterwards build the integration's BP and BS.

The first step is to create the service that will be consumed. Imagine it is an external service you need to call. We will create a BS to play that role for us. First, let's create the Request and Response classes of our “fake” service:

Class ws.soap.msg.Request Extends Ens.Request
{

Property codigo As %Integer;

}

Class ws.soap.msg.Response Extends Ens.Response
{

Property nome As %String;

Property status As %Boolean;

Property mensagem As %String;

Property sessionId As %Integer;

}

Now let's create the BS that will handle the SOAP requests of our example:

Class ws.soap.bs.Service Extends EnsLib.SOAP.Service
{

Parameter ADAPTER = "EnsLib.SOAP.InboundAdapter";

Parameter SERVICENAME = "consulta";

Method entrada(pInput As ws.soap.msg.Request) As ws.soap.msg.Response [ WebMethod ]
{
	//
	// This service simulates an external SOAP service for testing purposes
	//
	Set tResponse=##Class(ws.soap.msg.Response).%New()
	// Valid codes are 1 to 9, one for each name in the list below
	If (pInput.codigo>0)&&(pInput.codigo<10) {
		Set nome=$Piece("Julio^Sofia^Marcio^Liana^Julia^Pedro^Fernanda^Abel^Maria","^",pInput.codigo)
		Set tResponse.nome = nome
		Set tResponse.status = 1
		Set tResponse.mensagem = ""
	} Else {
		Set tResponse.nome = ""
		Set tResponse.status = 0
		// Portuguese for "code out of range (1-9)"
		Set tResponse.mensagem = "Codigo fora do limite (1-9)"
	}
	Quit tResponse
}

}
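To predict what SoapUI should return for each code, the service logic can be mirrored outside IRIS. The sketch below is plain Python, not ObjectScript; the function name `entrada` and the dictionary keys simply reuse the method and property names from the classes above, and `NOMES` is an illustrative name for the `^`-delimited list. Since `$Piece` is 1-based, code 1 maps to the first name.

```python
# Plain-Python mirror of ws.soap.bs.Service.entrada (illustration, not IRIS code)
NOMES = ["Julio", "Sofia", "Marcio", "Liana", "Julia",
         "Pedro", "Fernanda", "Abel", "Maria"]


def entrada(codigo: int) -> dict:
    """Return the same fields the fake SOAP service fills in."""
    if 0 < codigo < 10:
        # $Piece(list, "^", codigo) is 1-based, so code 1 -> NOMES[0]
        return {"nome": NOMES[codigo - 1], "status": True, "mensagem": ""}
    return {"nome": "", "status": False,
            "mensagem": "Codigo fora do limite (1-9)"}
```

For example, `entrada(3)` gives the name Marcio with `status` true, while `entrada(0)` and `entrada(10)` both take the out-of-range branch.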

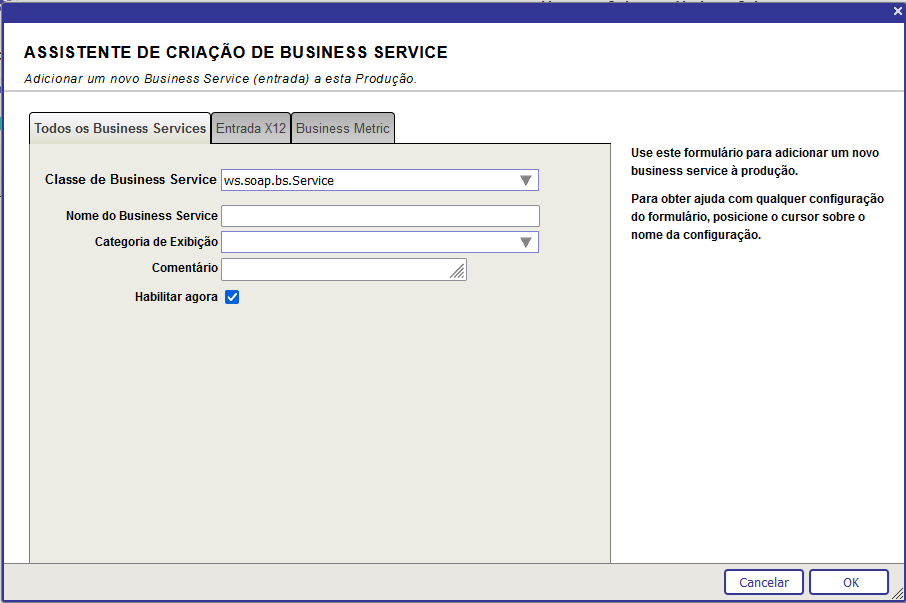

Now let's add our BS to the production. Open the Management Portal and go to our test production. Click the (+) button next to Services and fill in the form below with the data shown:

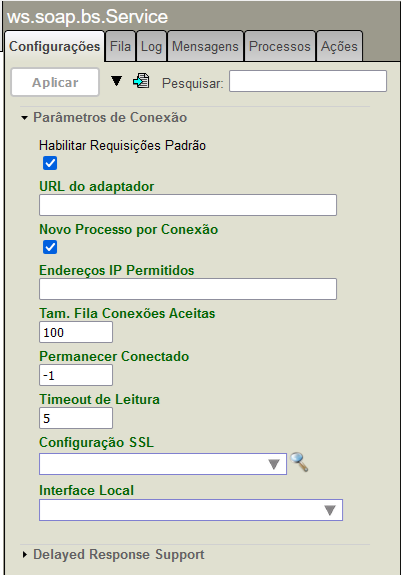

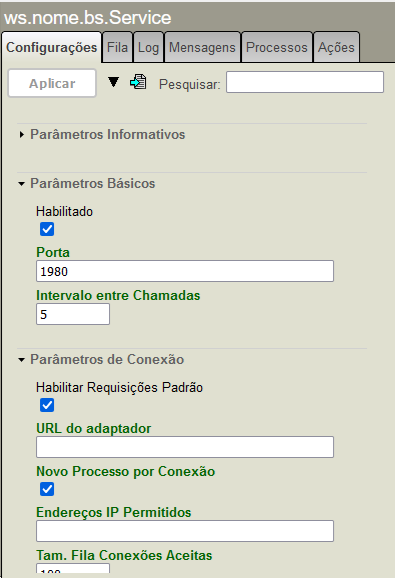

Now go back to the production, click the name of our BS (ws.soap.bs.Service), and look at its settings. Expand the Connection Settings area and check the Enable Standard Requests box, as shown in the screen below:

Then click Apply, and our test BS is ready.

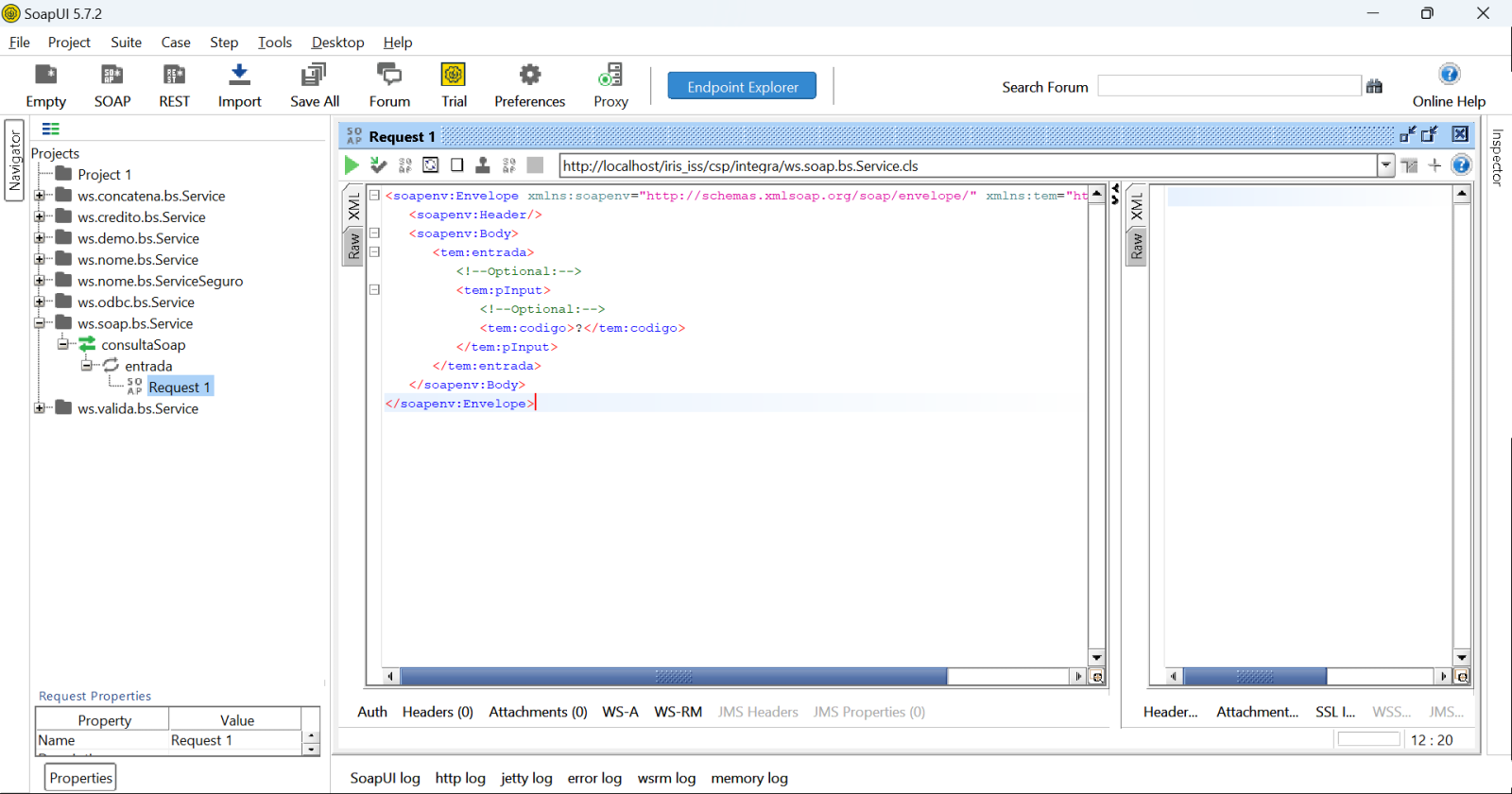

Now let's test our SOAP service by importing its WSDL into SoapUI. In my setup, the WSDL address of our service is http://localhost/iris_iss/csp/integra/ws.soap.bs.Service.cls?WSDL=1.

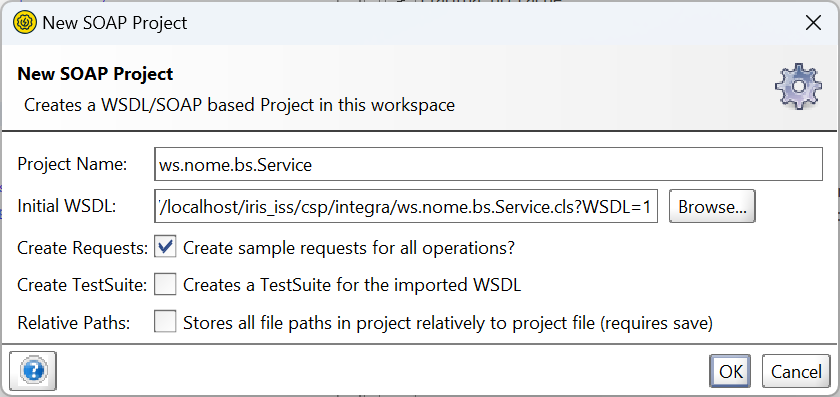

Open SoapUI and go to File->New SOAP Project. In the window that appears, enter the WSDL address in the Initial WSDL box and click OK:

Project Name is filled in automatically. After you click OK, SoapUI shows the test interface for the service:

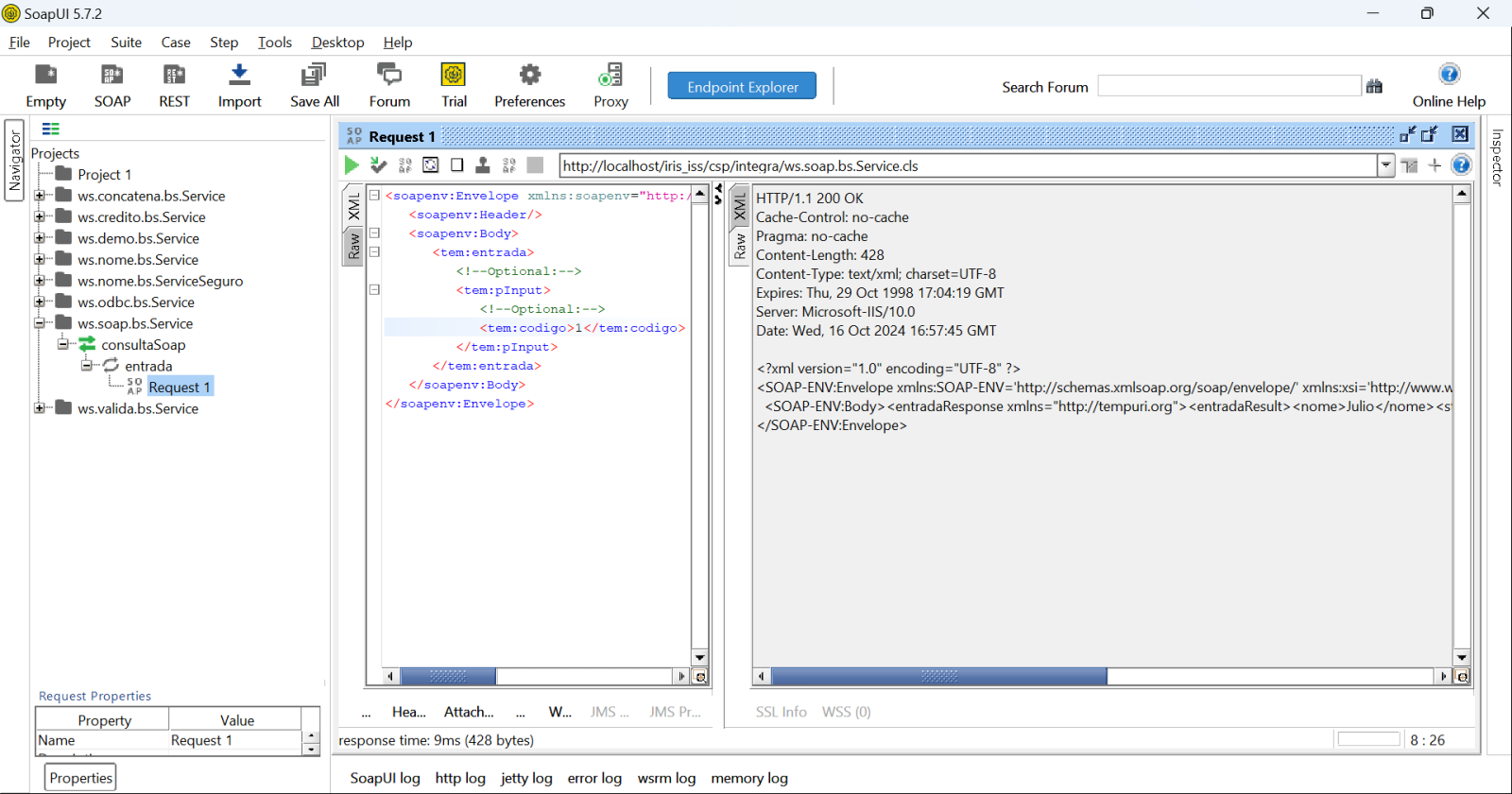

Replace the ? in the XML with a value between 1 and 9 and check the result:
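The request XML that SoapUI generates can also be built by hand, which is handy when calling the service from a script. This is a minimal sketch assuming the service keeps the IRIS default namespace `http://tempuri.org` (the class above does not override the NAMESPACE parameter) and that the body is `entrada` > `pInput` > `codigo`, with every element in that namespace; compare it with the request SoapUI created from the WSDL before relying on it. `build_entrada_envelope` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
SERVICE_NS = "http://tempuri.org"  # assumption: IRIS default NAMESPACE


def build_entrada_envelope(codigo: int) -> bytes:
    """Build a SOAP 1.1 envelope for the entrada operation."""
    ET.register_namespace("soapenv", SOAP_ENV)
    ET.register_namespace("tem", SERVICE_NS)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    # Assumed element names: the web method name, then its argument name
    entrada = ET.SubElement(body, f"{{{SERVICE_NS}}}entrada")
    p_input = ET.SubElement(entrada, f"{{{SERVICE_NS}}}pInput")
    ET.SubElement(p_input, f"{{{SERVICE_NS}}}codigo").text = str(codigo)
    return ET.tostring(envelope, encoding="utf-8", xml_declaration=True)
```

Calling `build_entrada_envelope(3)` produces the same shape as the SoapUI request with the ? replaced by 3.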

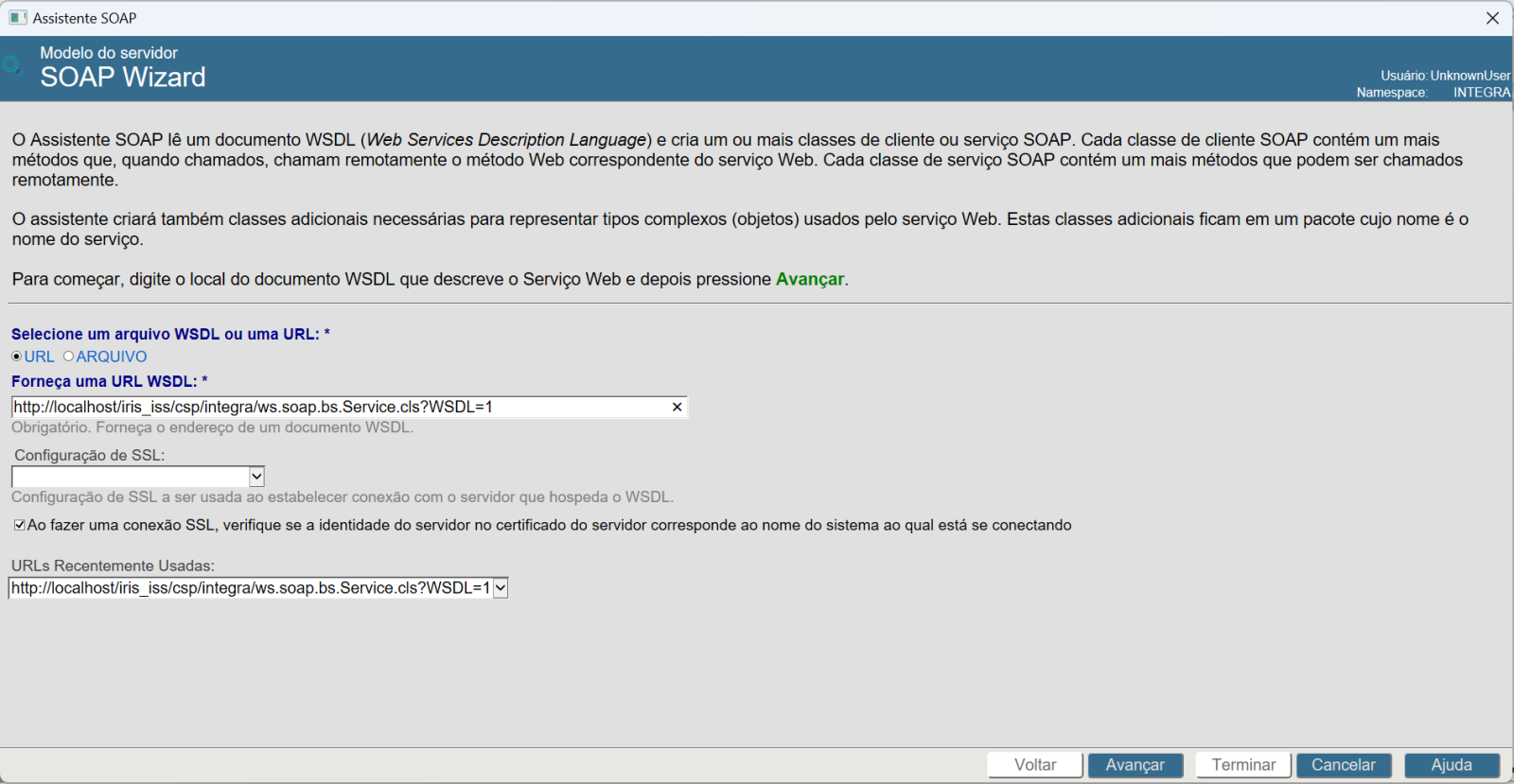
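The response shown by SoapUI can be read programmatically in the same way. The sketch assumes the result fields (`nome`, `status`, `mensagem`) are elements in the `http://tempuri.org` namespace, and it accepts both `true` and `1` for the boolean because XML Schema allows both. The `SAMPLE` envelope is an illustrative, hand-written response, not output captured from IRIS, and `parse_entrada_response` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SERVICE_NS = "http://tempuri.org"  # assumption: IRIS default NAMESPACE


def parse_entrada_response(xml_bytes: bytes) -> dict:
    """Extract nome/status/mensagem from an entrada SOAP response."""
    root = ET.fromstring(xml_bytes)

    def text(tag: str) -> str:
        # Search anywhere below the Envelope; missing or empty element -> ""
        el = root.find(f".//{{{SERVICE_NS}}}{tag}")
        return el.text if el is not None and el.text else ""

    return {
        "nome": text("nome"),
        # xsd:boolean uses "true"/"1" for true
        "status": text("status").strip() in ("true", "1"),
        "mensagem": text("mensagem"),
    }


# Illustrative response (hand-written, not captured from IRIS)
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<SOAP-ENV:Envelope xmlns:SOAP-ENV="http://schemas.xmlsoap.org/soap/envelope/">
  <SOAP-ENV:Body>
    <entradaResponse xmlns="http://tempuri.org">
      <entradaResult>
        <nome>Marcio</nome>
        <status>true</status>
      </entradaResult>
    </entradaResponse>
  </SOAP-ENV:Body>
</SOAP-ENV:Envelope>"""
```

A missing `mensagem` element is treated as an empty string, matching what the service sets for valid codes.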

Notice that we get back the name that corresponds to the code we sent.

Now that our service is ready, let's build the integration that consumes it. We will reuse the WSDL address of the service we just created to generate our BO. In Studio, select Tools->Add-Ins->SOAP Wizard from the menu:

Enter the address of the WSDL you want to consume and click Next:

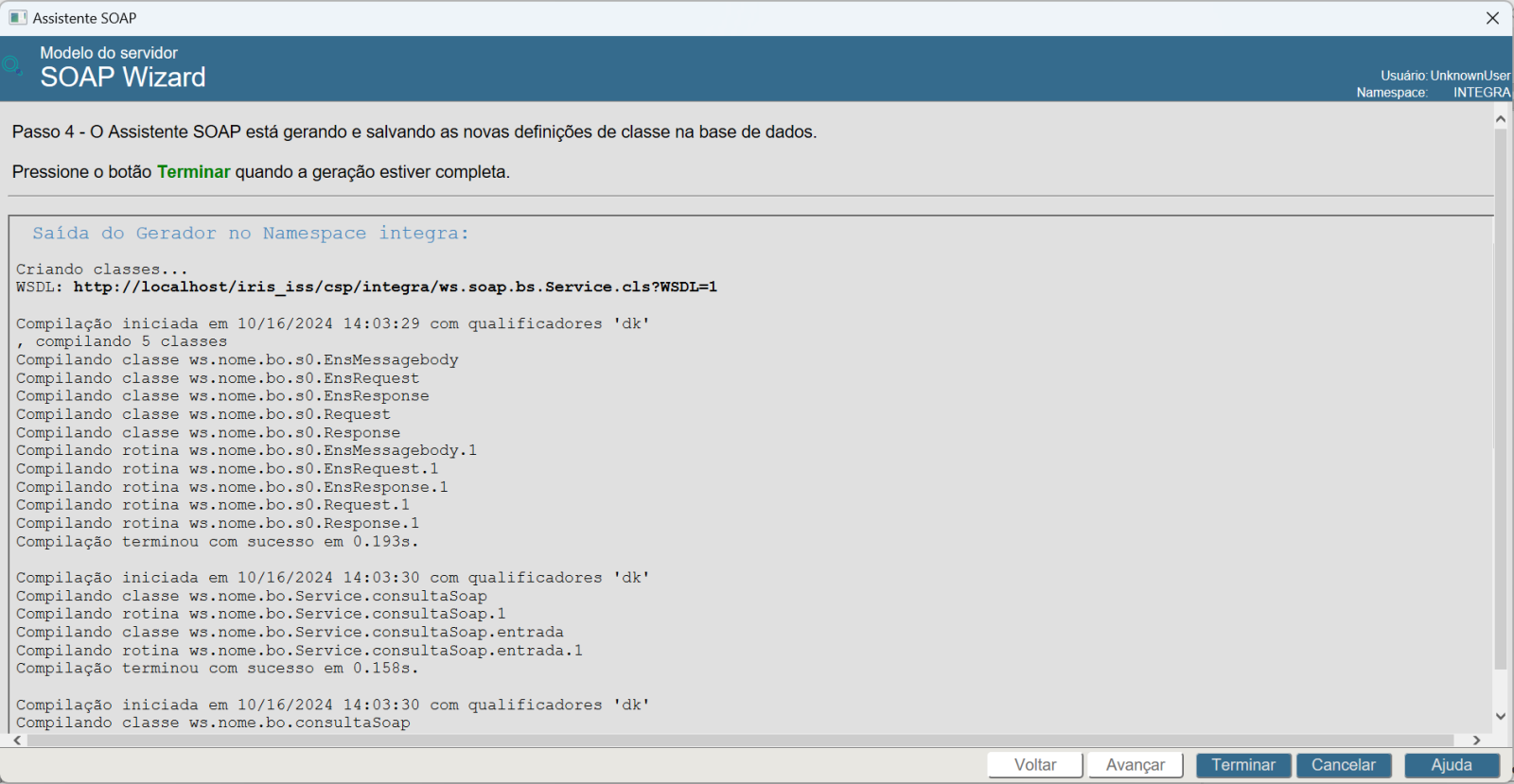

Check the Create Web Service and Create Business Operation boxes. Fill in the Business Operation Package, Request Object Package, Response Object Package, and Proxy Class Package boxes as shown in the screen above. Then click Next:

On the next screen, click Next:

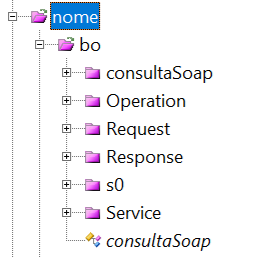

On the last screen, click Finish. The code of our BO is now ready. Expanding the namespace tree in Studio, we can see the classes and packages that were created:

Navigate to ws->nome->bo->Operation and locate the consultaSoap class. Open it in Studio and check which adapter it uses:


Notice that we are using the SOAP Outbound Adapter. The Studio wizard built our entire BO from the WSDL we provided.
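At the wire level, what the generated BO and the SOAP Outbound Adapter send is an HTTP POST carrying the envelope plus a SOAPAction header. The Python sketch below reproduces that exchange for comparison only; it is not how the adapter is implemented. The endpoint is the URL from this example without `?WSDL=1`, and the SOAPAction value assumes the IRIS default pattern `<namespace>/<class>.<method>`, so check the `soapAction` attribute in the WSDL. `build_post` and `call` are hypothetical helper names.

```python
import urllib.request

# Endpoint from this example (the WSDL URL without "?WSDL=1")
ENDPOINT = "http://localhost/iris_iss/csp/integra/ws.soap.bs.Service.cls"
# Assumption: IRIS default soapAction pattern; confirm it in the WSDL
SOAP_ACTION = "http://tempuri.org/ws.soap.bs.Service.entrada"


def build_post(envelope: bytes, endpoint: str = ENDPOINT,
               soap_action: str = SOAP_ACTION) -> urllib.request.Request:
    """Build (but do not send) a SOAP 1.1 POST request."""
    return urllib.request.Request(
        endpoint,
        data=envelope,
        method="POST",
        headers={
            "Content-Type": "text/xml; charset=utf-8",
            # SOAP 1.1 sends the action as a quoted string
            "SOAPAction": f'"{soap_action}"',
        },
    )


def call(envelope: bytes, timeout: float = 10.0) -> bytes:
    """Send the envelope and return the raw response body."""
    with urllib.request.urlopen(build_post(envelope), timeout=timeout) as resp:
        return resp.read()
```

SOAP faults are returned with HTTP status 500, which `urlopen` raises as `urllib.error.HTTPError`; the fault XML can still be read from the exception.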

Now let's create our BS. It will also be a BS that uses the SOAP Inbound Adapter. We will first create the Request and Response classes of our service and then the BS itself:

Here is the Request code:

Class ws.nome.msg.Request Extends Ens.Request
{

Property codigo As %Integer;

}

And here is the Response code:

Class ws.nome.msg.Response Extends Ens.Response
{

Property nome As %String;

Property status As %Boolean;

Property mensagem As %String;

Property sessionId As %Integer;

}

Now the BS code:

Class ws.nome.bs.Service Extends EnsLib.SOAP.Service
{

Parameter ADAPTER = "EnsLib.SOAP.InboundAdapter";

Parameter SERVICENAME = "nome";

Method entrada(pInput As ws.nome.msg.Request) As ws.nome.msg.Response [ WebMethod ]
{
	// Forward the request synchronously to the BP and wait for its response
	Set tSC=..SendRequestSync("bpNome",pInput,.tResponse)
	// On error, return the status to the SOAP caller as a SOAP fault
	If $$$ISERR(tSC) Do ..ReturnMethodStatusFault(tSC)
	Quit tResponse
}

}
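This BS is a thin pass-through: it sends the request synchronously to `bpNome` and, if the returned status is an error, turns it into a SOAP fault. The control flow can be modeled in Python as below; `production`, `send_request_sync`, and `SoapFault` are hypothetical stand-ins for the IRIS machinery, not real APIs.

```python
from typing import Callable, Dict, Tuple

# A handler returns (ok, payload): the response, or {"error": ...} on failure
Handler = Callable[[dict], Tuple[bool, dict]]


class SoapFault(Exception):
    """Stand-in for the SOAP fault that ReturnMethodStatusFault sends back."""


def send_request_sync(production: Dict[str, Handler], target: str,
                      request: dict) -> Tuple[bool, dict]:
    """Hypothetical analogue of SendRequestSync: call the target and wait."""
    handler = production.get(target)
    if handler is None:
        return False, {"error": f"target {target!r} is not in the production"}
    return handler(request)


def entrada(production: Dict[str, Handler], p_input: dict) -> dict:
    """Forward to bpNome; convert an error status into a fault."""
    ok, response = send_request_sync(production, "bpNome", p_input)
    if not ok:
        raise SoapFault(response.get("error", "unknown error"))
    return response
```

The model highlights one practical point: the target name is hard-coded, so the BP must be added to the production under exactly the name `bpNome`, otherwise every call ends in a fault.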

Note that Request has Ens.Request as its superclass and Response has Ens.Response as its superclass. This is important because it makes the integration bus features available to these messages.

Note also that our BS uses the EnsLib.SOAP.InboundAdapter adapter. This is what enables our BS to publish the SOAP service. We will need to adjust some of the BS settings later, but we will do that when we add it to the production.

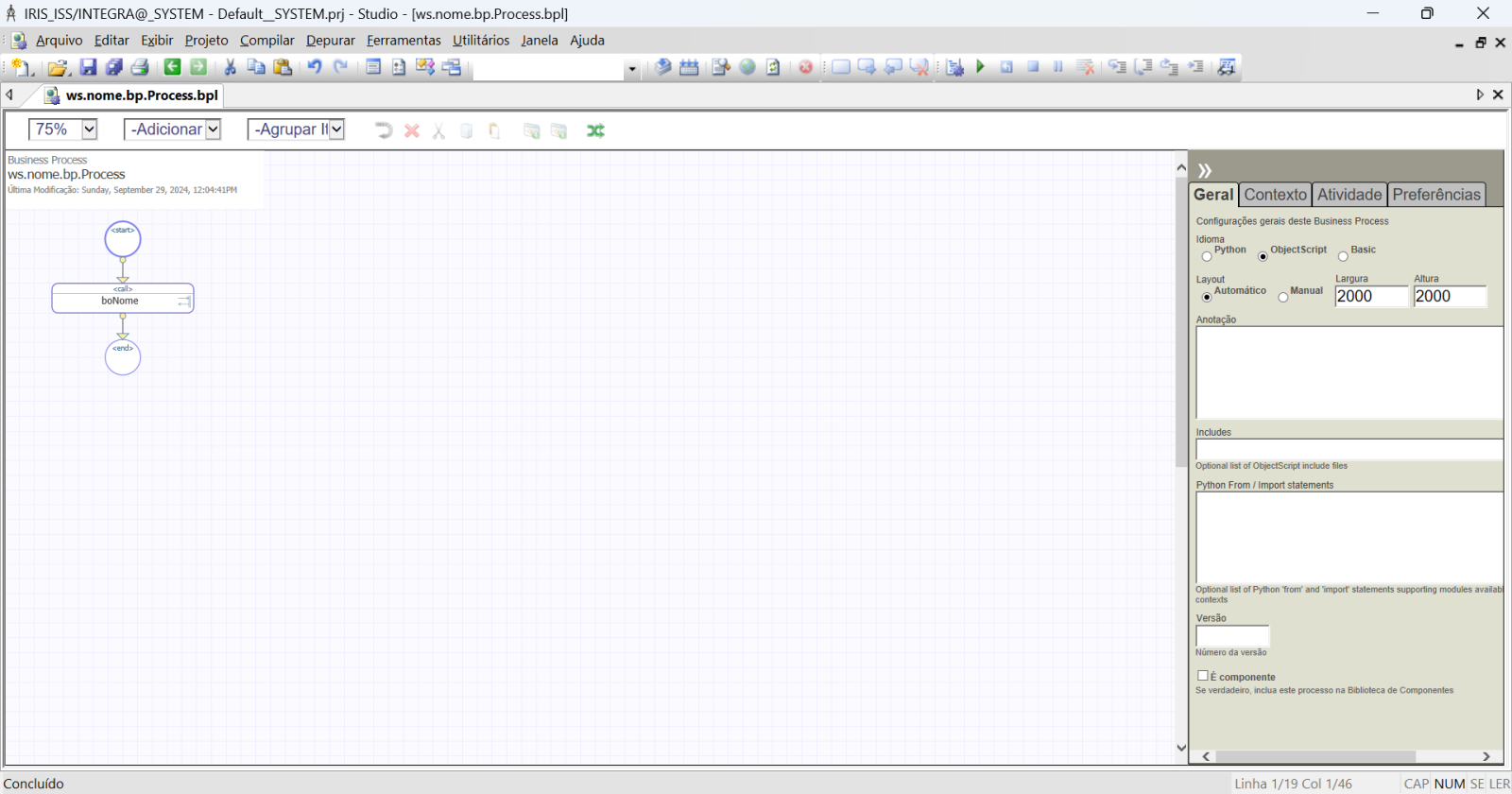

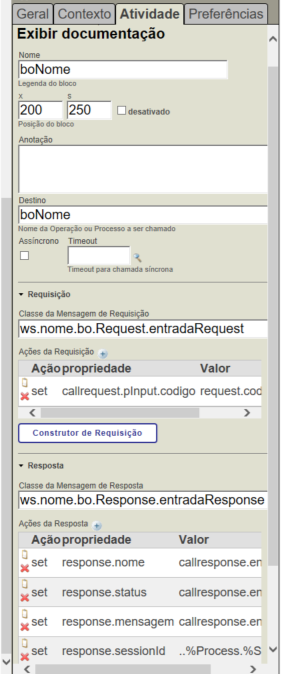

Finally, let's build our BP, which orchestrates the calls of the integration.

Configure our CALL component as follows:

The code of our BP will look like this:

///
Class ws.nome.bp.Process Extends Ens.BusinessProcessBPL [ ClassType = persistent, ProcedureBlock ]
{

/// BPL Definition
XData BPL [ XMLNamespace = "http://www.intersystems.com/bpl" ]
{
<process language='objectscript' request='ws.nome.msg.Request' response='ws.nome.msg.Response' height='2000' width='2000' >
<context>
<property name='valido' type='%Boolean' initialexpression='0' instantiate='0' />
</context>
<sequence xend='200' yend='350' >
<call name='boNome' target='boNome' async='0' xpos='200' ypos='250' >
<request type='ws.nome.bo.Request.entradaRequest' >
<assign property="callrequest.pInput.codigo" value="request.codigo" action="set" languageOverride="" />
</request>
<response type='ws.nome.bo.Response.entradaResponse' >
<assign property="response.nome" value="callresponse.entradaResult.nome" action="set" languageOverride="" />
<assign property="response.status" value="callresponse.entradaResult.status" action="set" languageOverride="" />
<assign property="response.mensagem" value="callresponse.entradaResult.mensagem" action="set" languageOverride="" />
<assign property="response.sessionId" value="..%Process.%SessionId" action="set" languageOverride="" />
</response>
</call>
</sequence>
</process>
}

Storage Default
{
<Type>%Storage.Persistent</Type>
}

}
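The `<assign>` elements inside the `<call>` are a field-by-field mapping between the BS messages and the wizard-generated BO messages. Written as plain Python dictionaries (an illustration only; the dictionary shapes mirror the property paths used in the BPL, and the function names are hypothetical), the mapping is:

```python
def map_call_request(request: dict) -> dict:
    """<request>: callrequest.pInput.codigo = request.codigo"""
    return {"pInput": {"codigo": request["codigo"]}}


def map_call_response(callresponse: dict, session_id: int) -> dict:
    """<response>: copy the entradaResult fields and add the session id."""
    result = callresponse["entradaResult"]
    return {
        "nome": result["nome"],
        "status": result["status"],
        "mensagem": result["mensagem"],
        # In the BPL this comes from ..%Process.%SessionId, not from the BO
        "sessionId": session_id,
    }
```

Only `sessionId` is produced by the BP itself; every other response field is copied from the external service's result.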

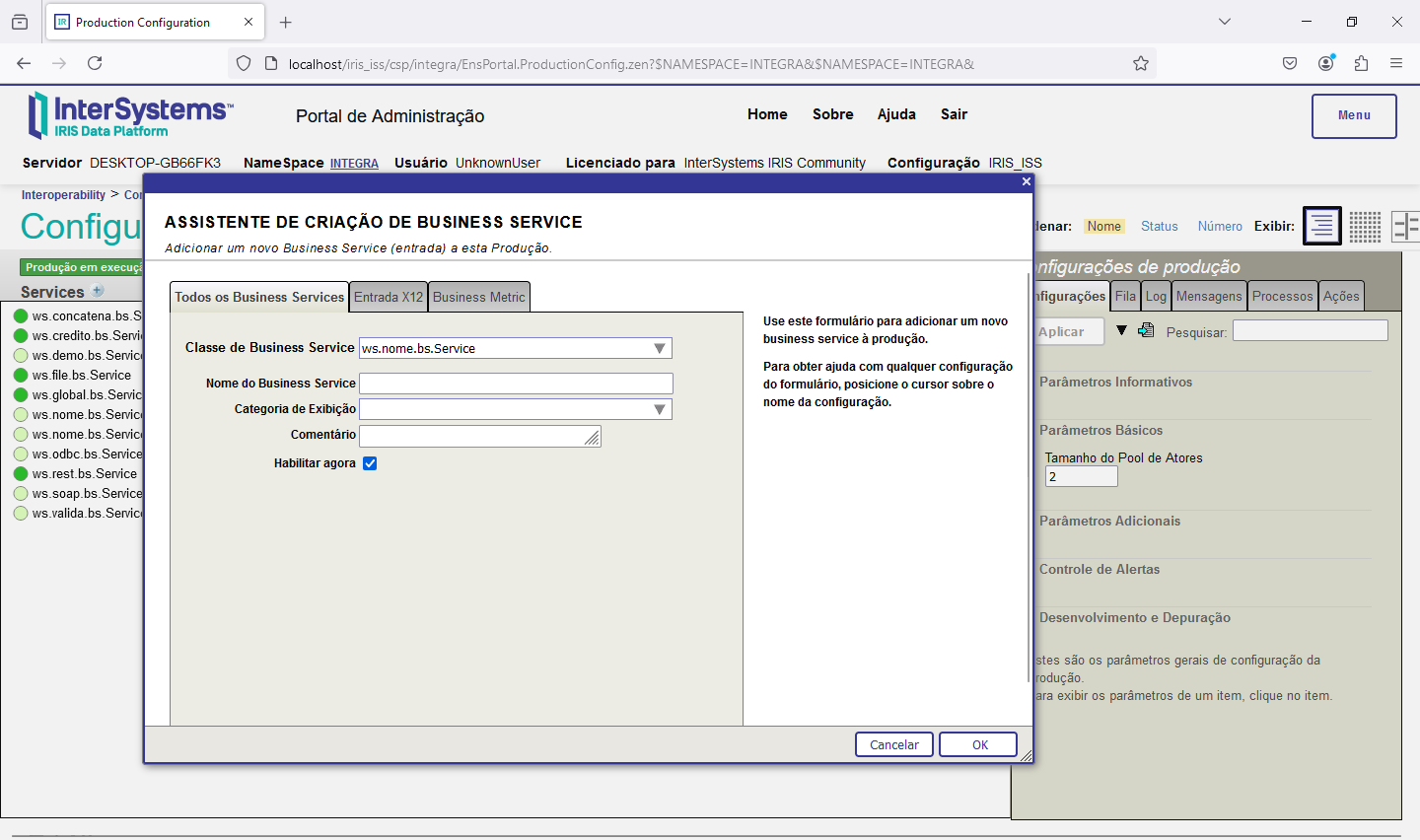

Now let's reuse the production we created in the previous example, add our components to it, and adjust a few settings. Click the (+) button next to the Services heading and add our BS as shown in the screen below:

Click OK, then click the name of our BS to complete its configuration. Expand the Connection Settings area and check the Enable Standard Requests box:

Now click Apply, and that's it: our BS is configured.

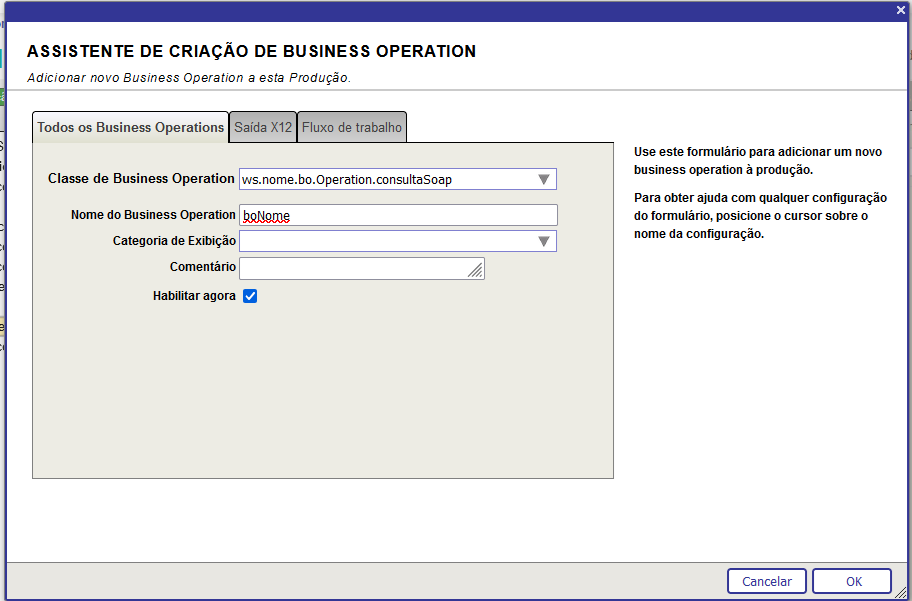

Now let's move on to our BO. The process is the same: click the (+) button next to the Operations heading and fill in the form that appears:

Done. Our BO is now configured in the production.

Next, click the (+) button next to the Processes heading to add our BP:

Fill in the form as shown above and click OK.

We now have our 3 components configured in the production. Click the green dot next to bpNome to see the connections between the components:

Now let's check the WSDL of our BS. Open http://localhost/iris_iss/csp/integra/ws.nome.bs.Service.cls?WSDL=1 in your browser to see our service's WSDL:
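Besides the browser, the WSDL can be checked from a script. The sketch below fetches a WSDL 1.1 document with the Python standard library and lists each binding operation with its `soapAction`. `fetch_wsdl` and `list_operations` are hypothetical helper names, and the inline `SAMPLE_WSDL` is a trimmed, hand-written fragment rather than the real IRIS output.

```python
import urllib.request
import xml.etree.ElementTree as ET

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"
SOAP_BIND_NS = "http://schemas.xmlsoap.org/wsdl/soap/"


def fetch_wsdl(url: str, timeout: float = 10.0) -> bytes:
    """Download the WSDL document (e.g. the ...?WSDL=1 URL above)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def list_operations(wsdl_xml: bytes) -> dict:
    """Map each binding operation name to its soapAction ('' if absent)."""
    root = ET.fromstring(wsdl_xml)
    ops = {}
    for op in root.iter(f"{{{WSDL_NS}}}operation"):
        # Only binding operations carry a soap:operation child
        soap_op = op.find(f"{{{SOAP_BIND_NS}}}operation")
        if soap_op is not None:
            ops[op.get("name")] = soap_op.get("soapAction", "")
    return ops


# Hand-written fragment for illustration (not the real IRIS WSDL)
SAMPLE_WSDL = b"""<definitions xmlns="http://schemas.xmlsoap.org/wsdl/"
    xmlns:soap="http://schemas.xmlsoap.org/wsdl/soap/">
  <portType name="nomeSoap"><operation name="entrada"/></portType>
  <binding name="nomeSoap" type="nomeSoap">
    <operation name="entrada">
      <soap:operation soapAction="http://tempuri.org/ws.nome.bs.Service.entrada"/>
    </operation>
  </binding>
</definitions>"""

# Against the live server (requires the production to be running):
# list_operations(fetch_wsdl(
#     "http://localhost/iris_iss/csp/integra/ws.nome.bs.Service.cls?WSDL=1"))
```

If the list is empty or `entrada` is missing, the BS class was not compiled or the URL points to the wrong namespace.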

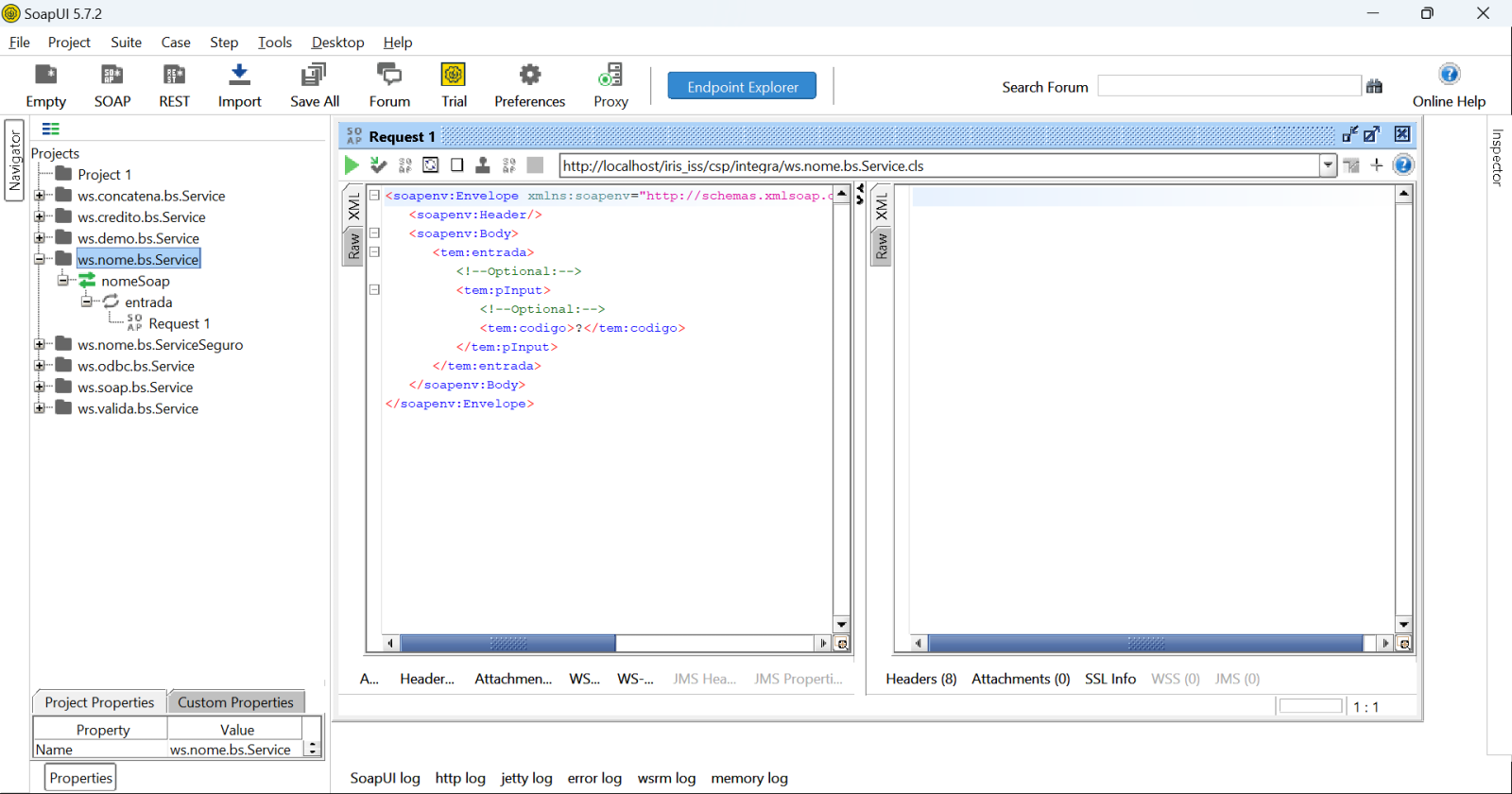

Go back to SoapUI, select File->New SOAP Project again, and fill in the form:

Fill in the Initial WSDL box; the Project Name box is filled in automatically. Click OK and check the test interface that was created:

Enter a code and click the Play icon. The result appears alongside:

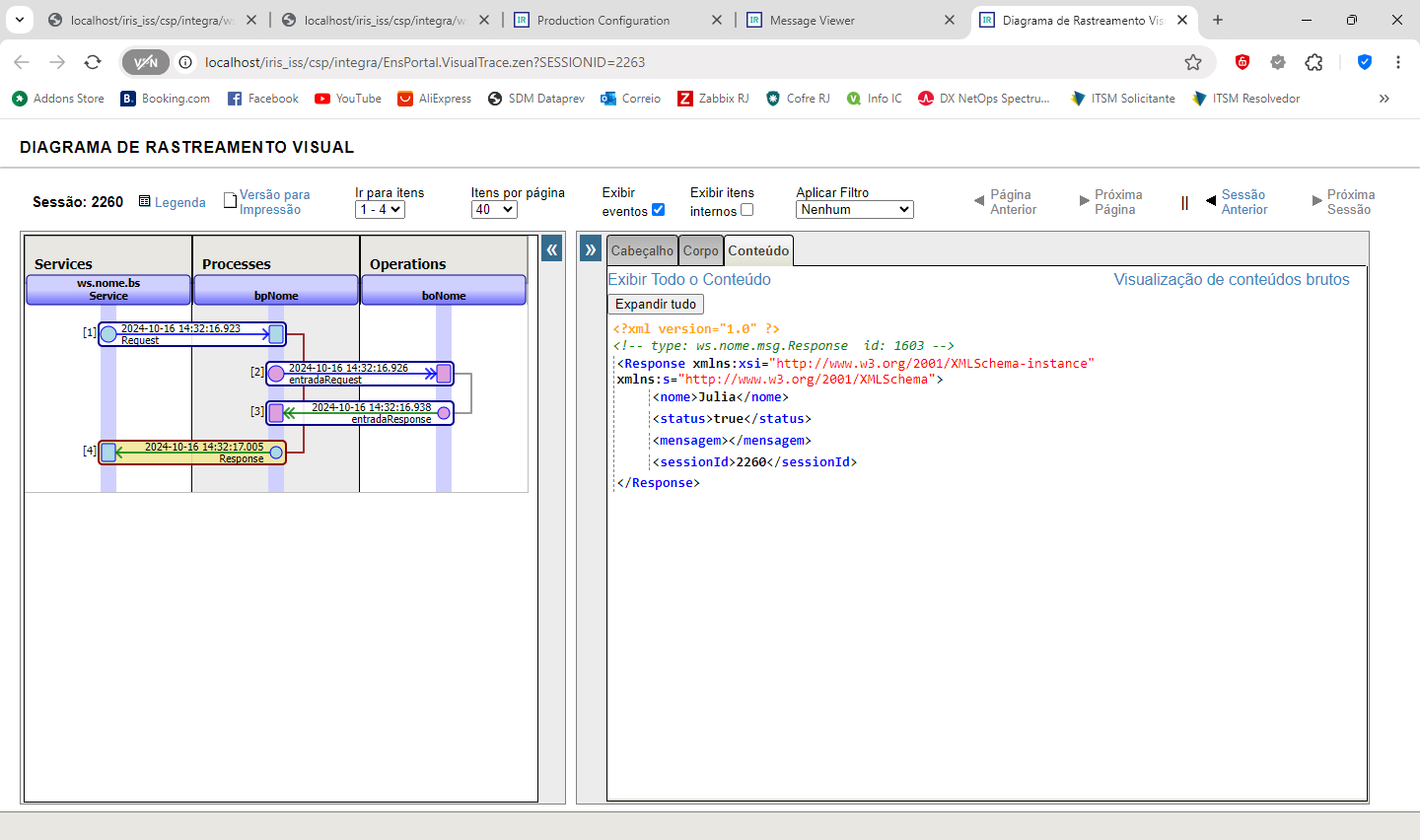

We can view the execution trace of this call:

This completes the integration. For our test we used IRIS 2024.1, whose Community edition is available for download online.
